The Challenge

Google is betting heavily on AI and machine learning. In early 2018, Google already offered a range of AI tools for developers and data scientists, but no “on-device” machine learning models. On-device models are arguably the most efficient and useful option for mobile applications, especially for the “next 1B users,” who typically have limited network connectivity. Adding out-of-the-box on-device models seemed like a win-win, but where and when?

Why not Firebase? Firebase is Google’s mobile development platform with over 1 million users, from programming students and hobbyists to large enterprise customers. I joined the Firebase team in 2017 and was approached a year later to help with the ambitious launch of ML Kit for Firebase. Our hypothesis was that the average mobile developer avoids machine learning because they assume it has a steep learning curve, even for simple on-device tools like text recognition (OCR). So in addition to the goal of adding on-device models, the question became:

“How can Firebase make machine learning tools more accessible for mobile developers?”

Design process

With just five months to launch a brand-new feature for I/O, we immediately jumped into conceptual user research to gather a broad understanding of the ML space. How did our developers think about AI and machine learning? One of the biggest challenges of this project was bringing together disparate teams from Firebase, Android, Mobile Vision and TensorFlow. The findings from this research were an early unifying moment for the ML Kit team and its new vision.

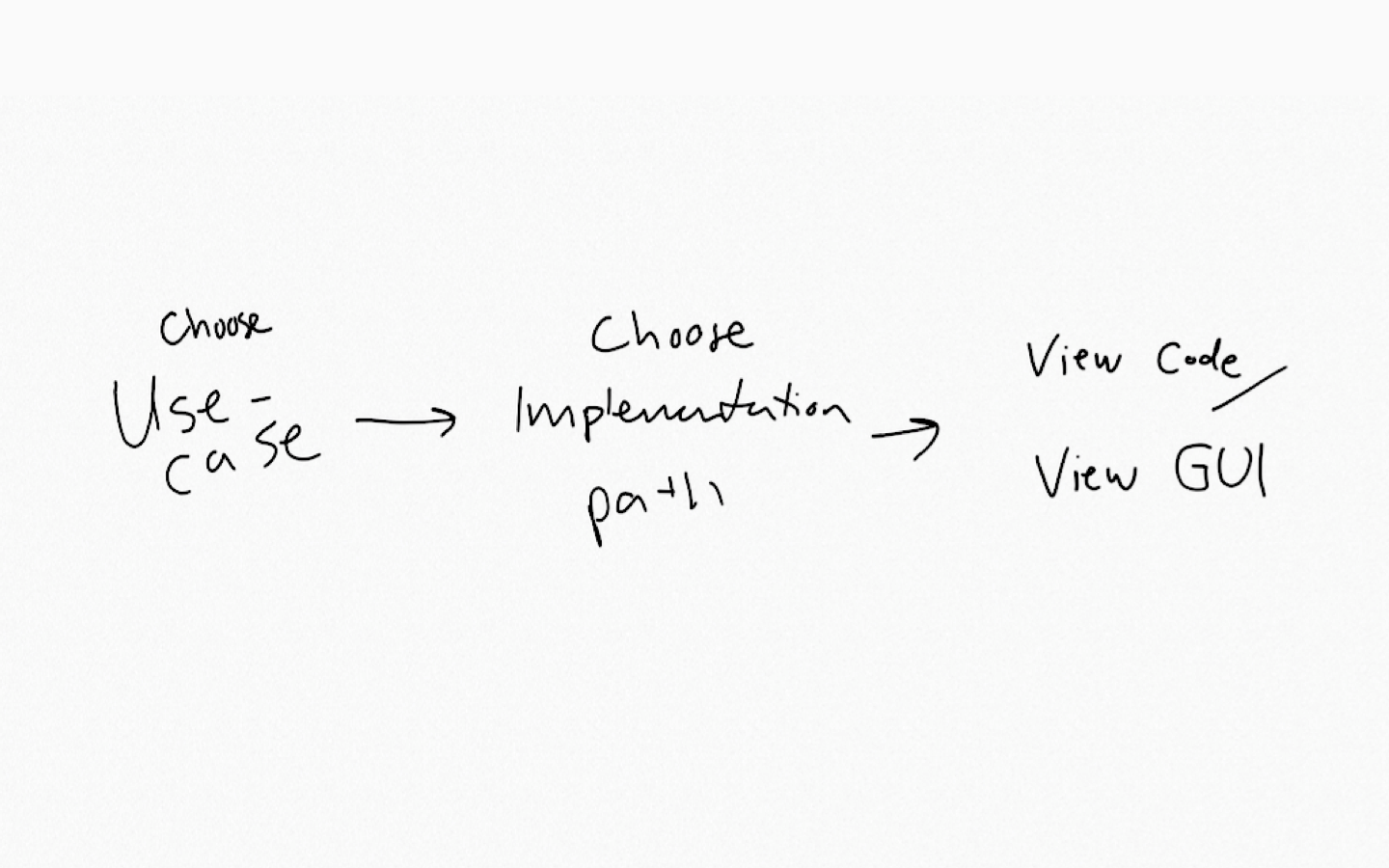

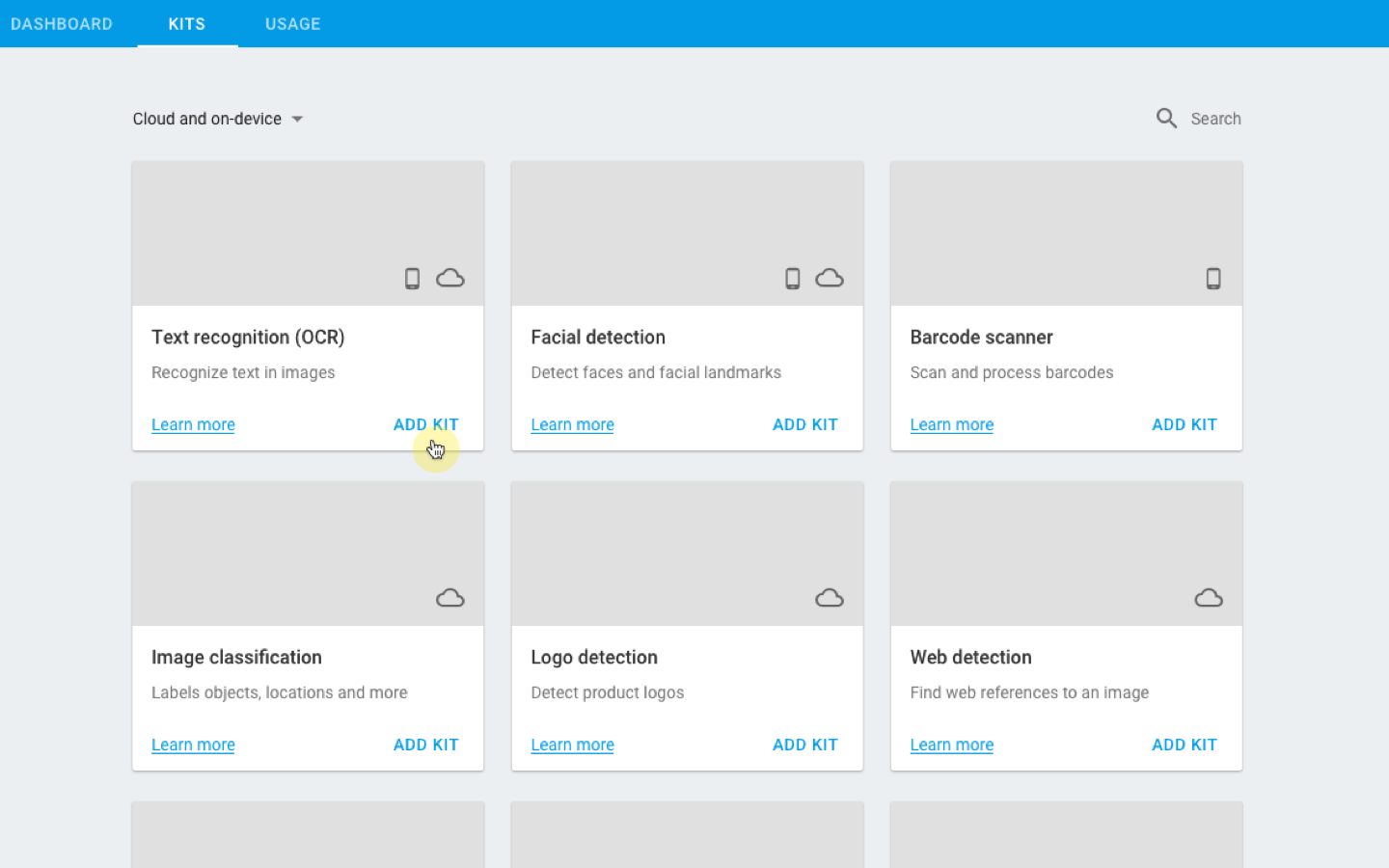

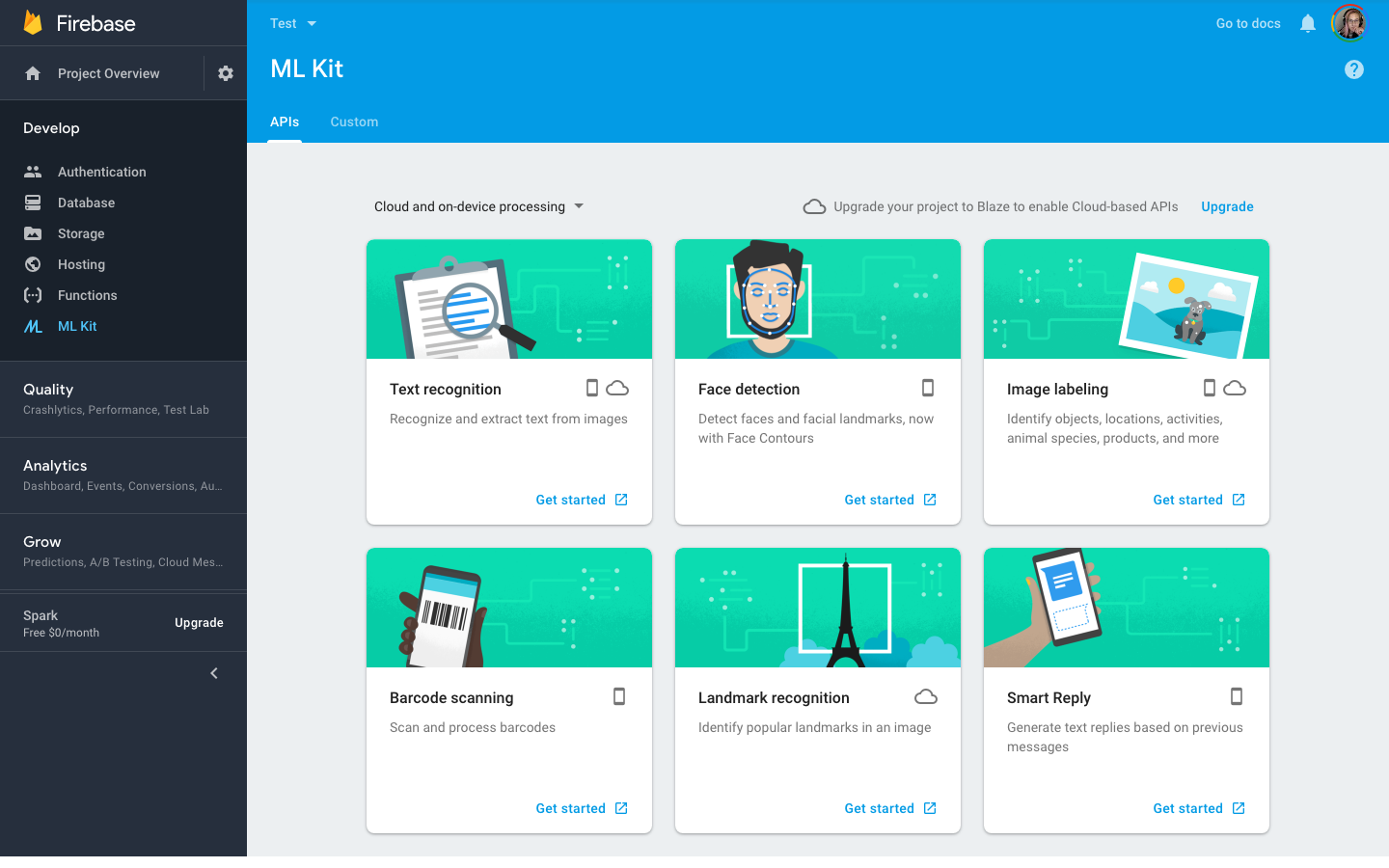

From exploratory designs and user journeys, it was clear that the main tension was between the Firebase console and its developer docs. How does one visualize an SDK? There was much back-and-forth on where the implementation should happen, but ultimately we settled on a visual landing page and analytics in the console, with guided implementation of the SDK in the developer docs. I brought the designs through numerous peer reviews in tandem with usability testing to validate the end-to-end flow with a wide range of Firebase users. Being part of such a public launch also meant working with illustrators and technical writers to polish my work before the big day.

MVP Launch!

In less than five months, we got ML Kit out to developers at a critical time for Google’s AI initiative. In the months that followed, we gathered product and market feedback to build a long-term product roadmap. In addition to fleshing out support for custom TensorFlow Lite model serving and compression, I assisted with the conceptual design phases of the recently launched Firebase AutoML before departing Google at the end of 2018.

Check out ML Kit on the Google Developers site or try it for yourself in the Firebase console.